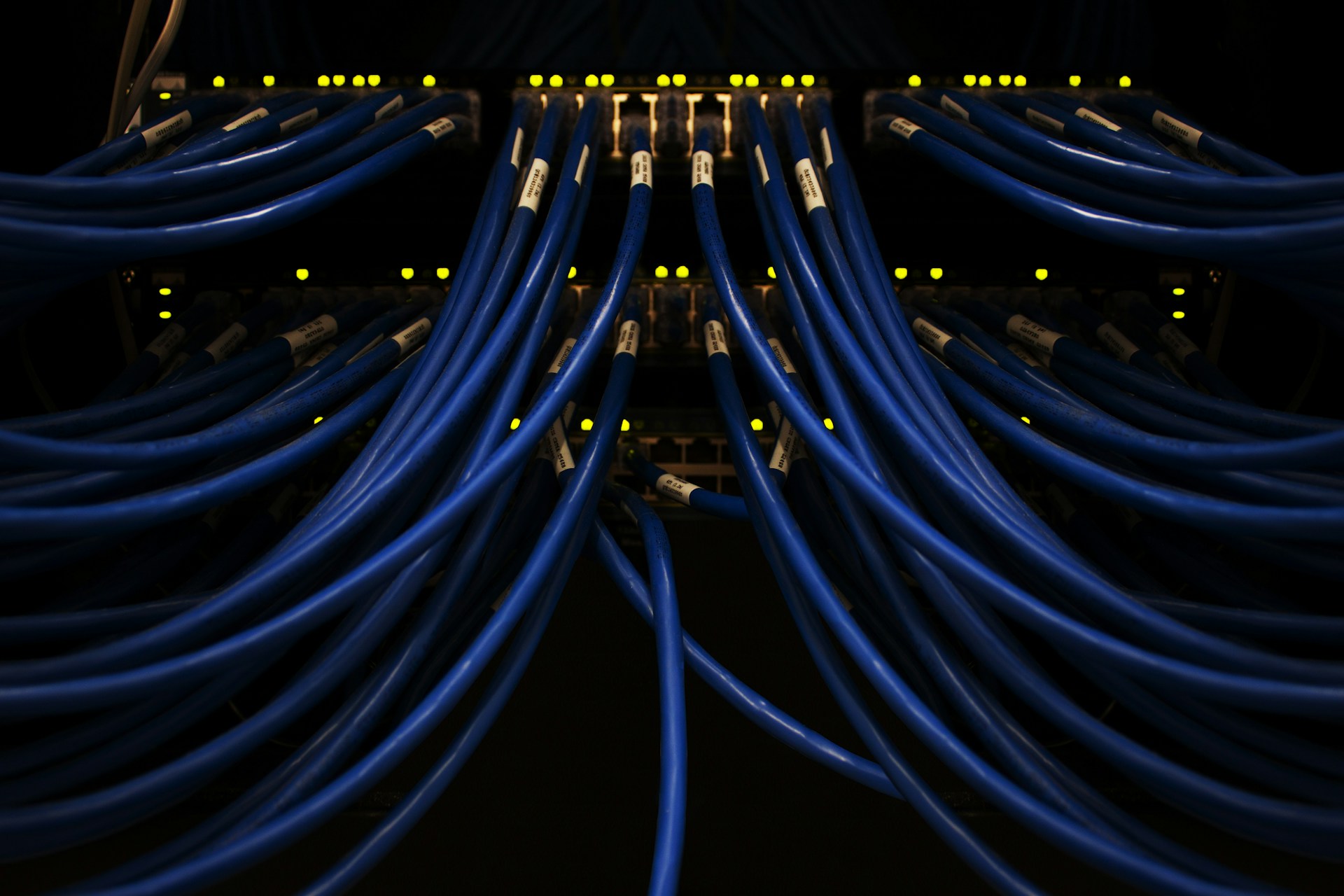

You know that phrase, "the cloud is just somebody else's computer"?

Some company racked and wired a server farm, which you're accessing through API calls.

The same holds for genAI: "that genAI LLM is just somebody else's model."

Some company trained the model, and you're accessing it through API calls.

(Yes, technically you could train your own LLM from scratch. Just like you could build out your own internal cloud infrastructure so your teams could have access to elastic resources. You could. But the fact of the matter is that most companies using a cloud provider's infrastructure, or a genAI vendor's LLM, are doing so precisely because the DIY approach is out of reach. They either want or need to build on top of someone else's work.)

And here's the thing: there's nothing inherently "wrong" with using someone else's genAI model!

It's mostly a matter of understanding your risk/reward tradeoff. That means considering the unknowns:

- You don't know what went into that vendor's model. (Case in point: look at all of the "your LLM stole my content" lawsuits.)

- You don't know how internal, "minor" model updates will impact the apps you've built on top of it. (But you'll find out one day when your app starts misbehaving.)

- You could lose access to that model at a moment's notice. (Maybe the vendor raises prices, or sunsets a model you were using, or that company shuts down, or …)

For some business use cases, that's fine.

But if any of those unknowns cause you discomfort … it's time for a think.
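One way to start that think is to put a thin layer between your app and the vendor. The sketch below is only an illustration under stated assumptions: `Model`, `complete_with_fallback`, `find_regressions`, and the two stand-in models are all hypothetical names, and no real vendor SDK is involved. It shows two ideas: falling back to a second model when the first one disappears, and re-running a handful of known prompts to spot behavior drift after a "minor" update.

```python
from typing import Callable, Sequence

# Hypothetical type: any function that takes a prompt and returns text.
# In a real app this would wrap a vendor's SDK call; none is assumed here.
Model = Callable[[str], str]


def complete_with_fallback(prompt: str, models: Sequence[Model]) -> str:
    """Try each model in order and return the first successful response."""
    errors = []
    for model in models:
        try:
            return model(prompt)
        except Exception as exc:  # e.g. vendor outage or a retired model
            errors.append(exc)
    raise RuntimeError(f"all {len(models)} models failed: {errors}")


def find_regressions(model: Model, golden: dict[str, str]) -> list[str]:
    """Return the prompts whose response no longer contains the expected text."""
    return [
        prompt
        for prompt, expected in golden.items()
        if expected.lower() not in model(prompt).lower()
    ]


# Stand-in models, for illustration only.
def retired_model(prompt: str) -> str:
    raise ConnectionError("model has been sunset")


def backup_model(prompt: str) -> str:
    return "Paris" if "France" in prompt else "I'm not sure."


# The first model fails, so the call falls through to the backup.
answer = complete_with_fallback("Capital of France?", [retired_model, backup_model])

# Golden prompts: the second one flags a behavior the app may depend on.
drifted = find_regressions(
    backup_model,
    {"Capital of France?": "paris", "Capital of Peru?": "lima"},
)
```

A substring check is a crude stand-in for real evaluation, but even a small set of golden prompts gives you a signal before your users do.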

Getting to know that vendor's genAI model

You didn't build it yourself, so you have to take some things on faith.