What you see here is last week's worth of links and quips I shared on LinkedIn, from Monday through Sunday.

For now I'll post the notes as they appeared on LinkedIn, including hashtags and sentence fragments. Over time I might expand on these thoughts as they land here on my blog.

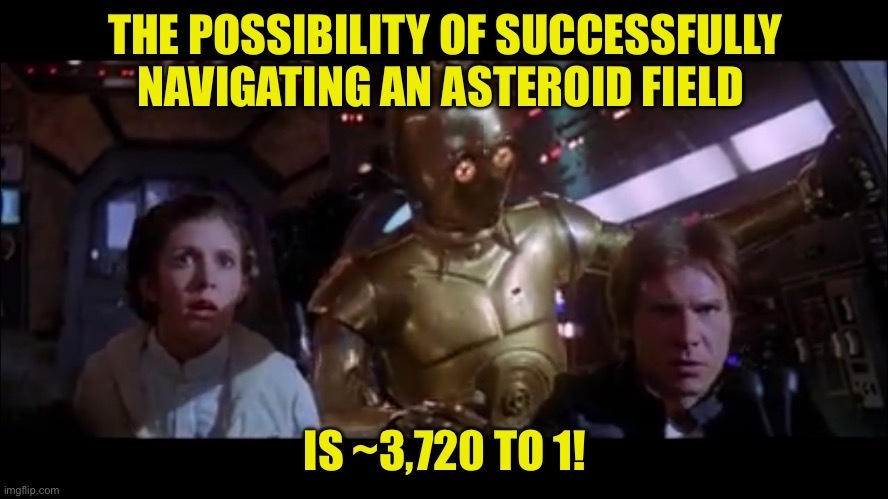

2023/03/20: Always tell me the odds

After last week's post on overconfident AI, I realize that the droids in the Star Wars universe are the kind of AI I want.

Consider C-3PO, for example:

- has a head full of knowledge, far beyond what any human brain can hold

- thanks to that knowledge, can do his job (translations) extremely well

- will let people know when a situation looks dicey

- openly complains when assigned a job he wasn't built to do

C-3PO and the other droids are purpose-built, task-focused, and know when they're operating out of their depth. This is the AI we need. Chatbots that emit truth and fiction with equal confidence? Not so much.

(Oh, and before anyone asks: yes, please, I want my AI to tell me the odds. Always.)

2023/03/21: Doubling down on an ineffective metric

I wrote a short post on workplace-surveillance software and why it is a terrible way to measure an ineffective metric.

"When You Measure the Wrong Thing"

2023/03/22: AI is not a paint job

I was recently riffing on an idea with Noelle Saldana, and she found the perfect way to summarize a point:

"Adding AI is not getting a new paint job; it's a full home remodel!"

She nailed it.

If you're serious about implementing AI in your company, then you can't treat it like a quick coat of paint. You need to find all the places in your organization where AI can provide some mix of "increase revenue," "decrease losses," "spot new opportunities," and "manage risk."

For more of Noelle's views on AI, check out her talk next week at the Data Council conference:

"Hot Takes and Tragic Mistakes: How (not) to Integrate Data People in Your App Dev Team Workflows" (March 30, 10AM CT)

2023/03/22: Order-to-cash

Great explanation of the order-to-cash (OTC) process in the linked article.

This is also an excellent risk management post in disguise: note how this traces the full process and identifies the various ways things can deviate from the preferred path. Lots of potential for even a seemingly "simple" process to go awry.

(A company can develop this knowledge through experience, or simulation, or anything else. The goal is to catch potential issues before your customers do.)

https://www.helloturbine.com/blog/order-to-cash-explained

2023/03/23: A picture burns a thousand trees

I wrote a little something about generated images and AI safety:

"When generated images take on a life of their own"

2023/03/24: Guardrails for AI

"We need to create guardrails for AI" (Financial Times)

Key excerpt:

> For now, in lieu of either outlawing AI or having some perfect method of regulation, we might start by forcing companies to reveal what experiments they are doing, what’s worked, what hasn’t and where unintended consequences might be emerging. Transparency is the first step towards ensuring that AI doesn’t get the better of its makers.
