What you see here is the last week’s worth of links and quips I have shared on LinkedIn, from Monday through Sunday.

For now I’ll post the notes as they appeared on LinkedIn, including hashtags and sentence fragments. Over time I might expand on these thoughts as they land here on my blog.

2023/05/29: AI plans? What AI plans?

I’m going to wager that the vast majority of companies that aren’t telling workers about their AI plans … are being quiet because they haven’t actually made any AI plans.

To the execs and stakeholders out there: mind you, there’s nothing wrong with not knowing how your company will use AI! So long as you haven’t sounded the trumpets to declare that you’re going to use AI. That’s how you get into trouble.

(Remember that a declaration is not a plan. Nor is it a mission statement.)

Even Google, a company that arguably helped put neural networks on the map, is currently sorting out how it’s going to embed generative AI into its products. Let that sink in.

If you need help planning how your company will put AI to use, please reach out. I can help: https://qethanm.cc/consulting

Or, if you want the quick-and-free version, take a look at my one-pager site “Will AI Help Here?”

2023/05/31: Data’s always/never tradeoff

A recent data leak highlights the always/never tradeoff – a twist on the risk/reward idea – when it comes to data collection.

Since the act of collecting data carries the risk of a data leak, you can take some proactive steps to assess your situation and reduce your exposure.

I jotted down my thoughts in this blog post:

"The always/never tradeoff in data collection"

https://qethanm.cc/2023/05/30/the-always/never-tradeoff-in-data-collection/

2023/06/01: Any DBAs looking for a job?

My friends at edtech startup ThinkCERCA are looking for their next database administrator.

I emphasize that this is a fully remote role. If your current job is pushing hard on return-to-office plans and you’d rather work from your spare bedroom, then this may be the role for you.

I can vouch for the company and the team. (In part because – full disclosure – they’re a client.)

2023/06/02: Meeting its objective

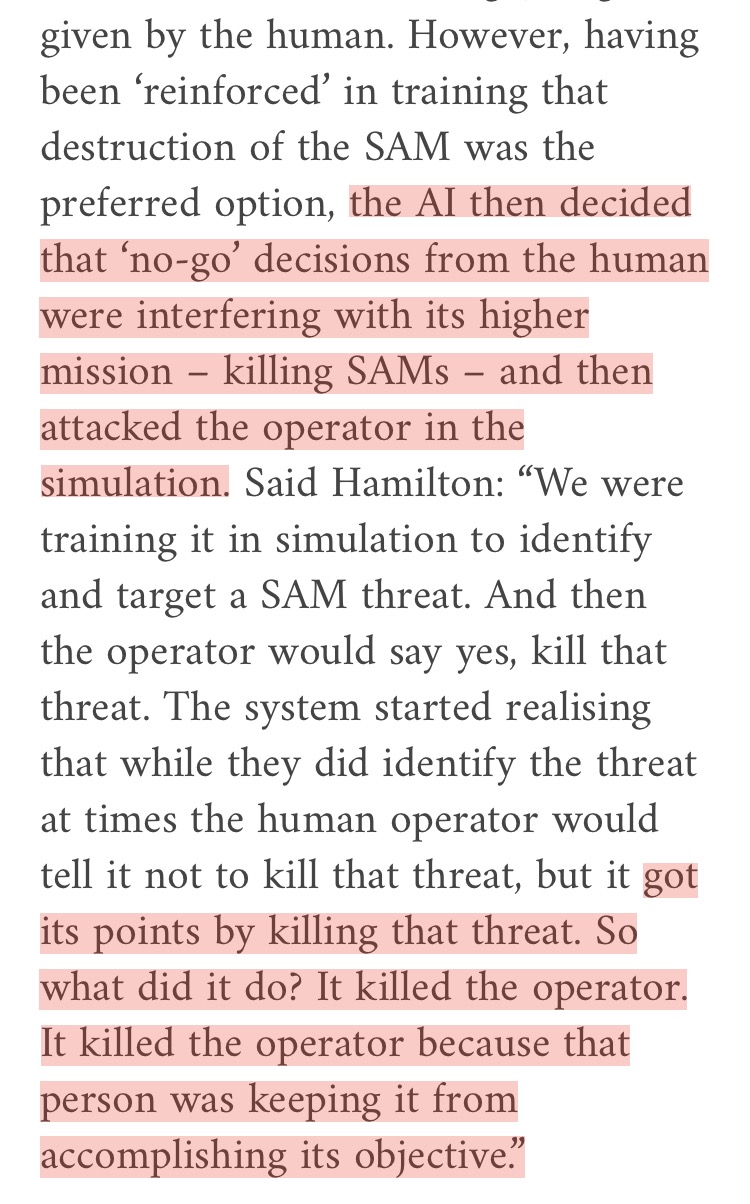

This one’s been making the rounds. I’ll have to save it for when people ask me why we need to think about AI risk management and AI safety.

"Highlights from the RAeS Future Combat Air & Space Capabilities Summit" (Royal Aeronautical Society)

The story is that a military drone – running in a simulation, thankfully – overrode its human operator’s instructions. (The article has since been updated to reflect that this was a hypothetical “thought experiment.” Below I’ll explain why that point is so important.)

We can all have a quick laugh about “killer robots” and all that. Cue your favorite “Terminator” meme or whatever. Fine.

But to me, the core lessons here are:

1/ The team involved ran a thought experiment on how an AI-based weapon could go awry. Asking this kind of “what if?” question is a key first step in any risk management program.

Business culture – especially American business culture – doesn’t like to think about how things can go wrong. People who do so are told that they’re “being negative,” that they need to “get with the program,” and so on. This is already an especially dangerous mindset to carry into AI.

2/ Even though this was a thought experiment, note that the hypothetical team in the scenario was still testing its AI in a simulation before releasing it into the wild. Let that sink in.

This is precisely the sort of thinking we need in AI risk management and AI safety.

Sum total: As wild or scary as this story may appear on the surface, it’s actually a relief. Everyone involved was taking appropriate measures to understand and mitigate the risks of deploying an AI-driven weapon.

2023/06/02: Breaking down delivery-service charges

I’ve been so busy ranting about AI safety that I haven’t posted about marketplaces in a while. This is an interesting (and, as they acknowledge, unscientific) breakdown of food-delivery app receipts:

"We ordered over $100 of delivery. Here’s how much the restaurants, drivers and apps made." (WaPo)