(Photo by Tim Johnson on Unsplash)

The risks and rewards of using vendor APIs for generative AI models

I think there’s an understated and underappreciated difference between “building on open source libraries” and “building on APIs”:

Most open source licenses permit you to keep copies of the code, and even modify it to your liking. What if the maintainers introduce breaking changes into a new version? What if they shut down the project? While this is a problem for you, it’s not a pressing one: you can keep using the version you already have, so you’re insulated from changes in the project. The apps your company has built on that code will keep running, at least for now.

(This ability to keep using code even after a project’s demise was a key element in the origins of free/open-source software. But that’s a story for another day …)

When you build on APIs, by comparison, your app depends on the provider’s continued existence. If they change their service offering, if they go under, or if one of your competitors buys them … your product could stop working overnight.

I’m thinking of this now in light of the recent investigations and lawsuits brought against providers of generative AI tools, such as OpenAI’s ChatGPT. They’re in hot water because their models’ training data allegedly includes content that was not theirs to use. According to a piece in the Financial Times:

> [FTC Chair Lina Khan] said the regulator’s broader concerns involved ChatGPT and other AI services “being fed a huge trove of data” while there were “no checks on what type of data is being inserted into these companies”.
>
> She added: “We’ve heard about reports where people’s sensitive information is showing up in response to an inquiry from somebody else. We’ve heard about libel, defamatory statements, flatly untrue things that are emerging. That’s the type of fraud and deception that we’re concerned about.”

I am neither a lawyer nor a regulator, so I’m not sure how all of this will turn out. What I do know is that this should serve as an eye-opener to any company that plans to build on third-party APIs. These generative AI models need training data in order to work. If someone challenges the providers’ right to use that training data, they’re effectively challenging the very existence of the AI models that power these APIs.

What happens to your business if OpenAI changes its service offerings, or dramatically raises prices, or swaps in lower-quality training data in response to this legal action? (This is in addition to the questions you should have already asked about model risk and indemnification.)

If you don’t have answers, it’s time to figure them out.

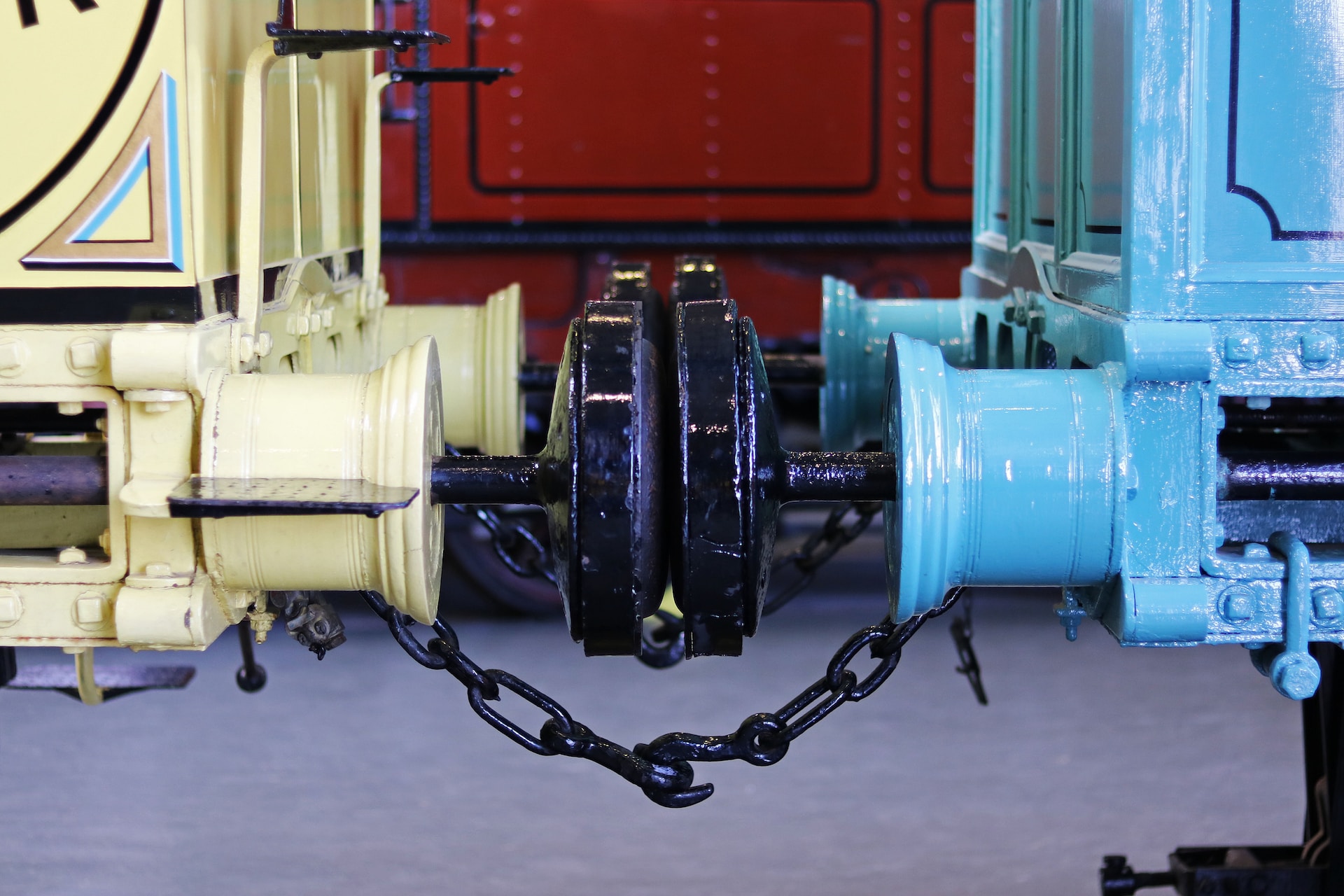

This isn’t to say that you shouldn’t use vendors’ APIs. Far from it. It’s a reminder that risk and reward go hand in hand, and that using APIs exposes you to a special kind of third-party risk. The reward is that you don’t have to build something from scratch. You get to embed functionality into your product, right now, with the swipe of a credit card and a few lines of code. The associated risk is that your product is now chained to the API provider’s fate.

So long as you understand these risks and have developed a mitigation strategy, you should be in good shape.
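As one illustration of what a mitigation strategy can look like in code (a sketch under stated assumptions, not the only approach), your app can talk to a thin interface you own instead of calling a vendor’s SDK directly. That way, swapping providers or falling back to a self-hosted model is a configuration change rather than a rewrite. Every name below (`TextGenerator`, `VendorAdapter`, `LocalModelAdapter`, `generate_with_fallback`) is hypothetical, and the adapters return canned strings where a real one would call an actual service.

```python
from typing import Protocol, Sequence


class TextGenerator(Protocol):
    """Hypothetical interface your app codes against instead of a vendor SDK."""

    name: str

    def generate(self, prompt: str) -> str: ...


class ProviderUnavailable(Exception):
    """Raised by an adapter when its backing service can't serve a request."""


class VendorAdapter:
    """Hypothetical wrapper around a third-party API (no real SDK is called)."""

    name = "vendor-api"

    def __init__(self, available: bool = True) -> None:
        self.available = available

    def generate(self, prompt: str) -> str:
        if not self.available:
            # Stands in for an outage, a discontinued product, or a pulled model.
            raise ProviderUnavailable(self.name)
        # A real adapter would make the vendor's HTTP call here.
        return f"[{self.name}] response to: {prompt}"


class LocalModelAdapter:
    """Hypothetical wrapper around a model you host and control yourself."""

    name = "local-model"

    def generate(self, prompt: str) -> str:
        return f"[{self.name}] response to: {prompt}"


def generate_with_fallback(providers: Sequence[TextGenerator], prompt: str) -> str:
    """Try each provider in order and return the first successful response."""
    errors = []
    for provider in providers:
        try:
            return provider.generate(prompt)
        except ProviderUnavailable as exc:
            errors.append(str(exc))
    raise RuntimeError(f"all providers failed: {errors}")


if __name__ == "__main__":
    # The vendor is "down", so the request falls through to the local model.
    providers = [VendorAdapter(available=False), LocalModelAdapter()]
    print(generate_with_fallback(providers, "Summarize this memo"))
```

The design choice here is that only the adapters know about any particular provider; the rest of the app sees one `generate` call. That won’t shield you from a vendor raising prices or degrading quality, but it does keep the cost of walking away low.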